Writing the evaluation is often the most difficult part of an enquiry. One way to tackle it is to think about each part of your work in turn: methods, data collection, data analysis and conclusions.

Evaluating your methods

Be objective

Think about the frequency and timing of observations.

- Have you taken enough samples to be representative?

- How did you avoid bias?

- For non-probability sampling, what are the limitations?

- Did interviewees understand the questions?

- How did you deal with non-responders?

Be critical

Be ethical

The best geographical investigations will consider the ethical implications at each stage.

- How did you avoid damaging the environment?

- How did you avoid causing offence or a nuisance?

- Did you have consent to carry out observations?

Evaluating your data collection

Be objective

Consider the type of variables and whether the data follow a normal distribution.

- Is every graph accurate?

- Is your choice of measures of central tendency and dispersion appropriate for the type of data?
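To see why the choice of measure matters, here is a short Python sketch that compares measures of central tendency (mean, median) and dispersion (range, interquartile range, standard deviation) for a small set of hypothetical pedestrian counts. The function name `summarise` and the data are illustrative only. One unusually busy site pulls the mean well above the median. This is why the median and interquartile range are often preferred for skewed or ordinal data, while the mean and standard deviation suit roughly normally distributed interval or ratio data.

```python
import statistics

def summarise(values):
    """Return common measures of central tendency and dispersion (hypothetical helper)."""
    ordered = sorted(values)
    # Quartiles via Python's default "exclusive" method; other software may
    # calculate quartiles slightly differently for small samples.
    q1, _, q3 = statistics.quantiles(ordered, n=4)
    return {
        "mean": statistics.mean(ordered),
        "median": statistics.median(ordered),
        "range": ordered[-1] - ordered[0],
        "iqr": q3 - q1,
        "stdev": statistics.stdev(ordered),  # sample standard deviation
    }

# Hypothetical pedestrian counts at six sites; one very busy site skews the data.
counts = [12, 15, 14, 13, 16, 90]
for name, value in summarise(counts).items():
    print(f"{name}: {value:.1f}")
```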

Be critical

Validity means the suitability of a technique to answer the question that it was intended to answer.

- Is every chart, graph and other data presentation technique valid?

Be ethical

- Have you acknowledged all the sources of secondary data that you have used?

Evaluating your data analysis

Be objective

Statistical tests have different requirements for minimum number of measurements, type of variable and whether a normal distribution is required.

- Can you provide a mathematical justification for your choice of statistical test?

- For qualitative techniques, have you eliminated your own subjectivity as far as you can?

Be critical

Results may not turn out as you expected. Testing may show that your results are not statistically significant. This does not mean that your results are “wrong”.

- Have you tested for statistical significance?

- Have you checked your figures to make sure that your calculations are correct?
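Repeating a calculation in code is one way to check your figures. The guide does not name a particular test, so as one example, this Python sketch calculates Spearman's rank correlation coefficient, a test often used in geography enquiries, with the formula rs = 1 − 6Σd² / (n(n² − 1)). The function names and the river data are hypothetical. To judge statistical significance you would still compare rs with a table of critical values for your sample size and chosen significance level (for example 0.05); that lookup is not included here.

```python
def rank(values):
    """Rank values from 1 (smallest) upwards; tied values share their average rank."""
    ordered = sorted(values)
    ranks = []
    for v in values:
        first = ordered.index(v) + 1          # first position of v in sorted order
        ties = ordered.count(v)               # how many values are equal to v
        ranks.append(first + (ties - 1) / 2)  # mean of the tied positions
    return ranks

def spearman_rs(x, y):
    """Spearman's rank correlation coefficient: rs = 1 - 6*sum(d^2) / (n(n^2 - 1)).

    This shortcut formula is exact only when there are no tied ranks.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two values")
    n = len(x)
    # d is the difference between the two ranks for each pair of measurements
    d_squared = sum((rx - ry) ** 2 for rx, ry in zip(rank(x), rank(y)))
    return 1 - (6 * d_squared) / (n * (n ** 2 - 1))

# Hypothetical river data: distance downstream (km) and channel width (m).
distance = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
width = [1.2, 1.5, 1.4, 2.1, 2.6, 2.4]
print(f"rs = {spearman_rs(distance, width):.3f}")
```

A value close to +1 suggests a strong positive relationship, close to −1 a strong negative one, and close to 0 little or no relationship.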

Be ethical

- Have you ensured confidentiality and anonymity in analysing the results?

Evaluating your conclusions

A valid conclusion is supported by reliable data obtained using a valid method and based on sound reasoning.

Be objective

- Have you described every trend, pattern and relationship from your data presentation and analysis?

- Have you considered all three sources of error?

Be critical

- Are all your conclusions supported by the evidence?

- How have you judged that your conclusions are valid?

Be ethical

- Is there a statement which details the ethical considerations of your research?

- How successfully have you minimised the harm that your investigation may have upon the environment and the people in it?

Useful words for your evaluation

Here are some useful terms for evaluating your methods.

True value

The true value is the value that would be obtained in an ideal measurement.

Accuracy

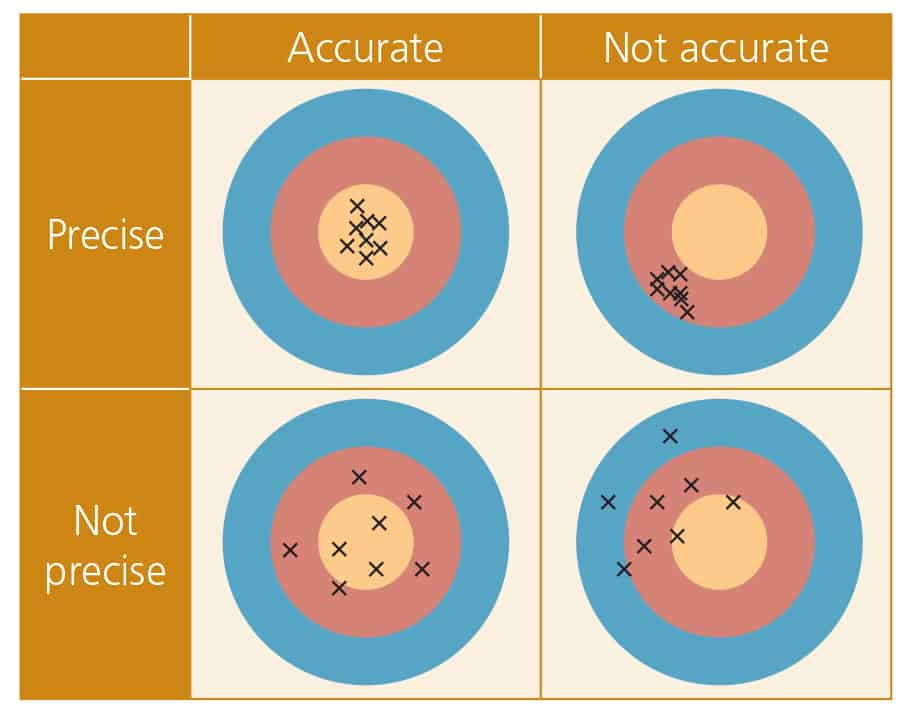

Accuracy means how close a measurement is to the true value.

The closer a measured value is to the true value, the more accurate it is. The further a measured value is from the true value, the greater the error.
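As a small illustration, this Python sketch works out the error of each reading (measured value minus true value) and picks the most accurate one. It assumes hypothetical thermometer readings taken in water known to be at 20.0 °C; the function names are illustrative only.

```python
def reading_errors(readings, true_value):
    """Error of each reading: measured value minus the true value."""
    return [r - true_value for r in readings]

def most_accurate(readings, true_value):
    """The reading closest to the true value (smallest absolute error)."""
    return min(readings, key=lambda r: abs(r - true_value))

# Hypothetical thermometer readings of water known to be at 20.0 °C.
readings = [20.4, 19.7, 21.5]
for r, e in zip(readings, reading_errors(readings, 20.0)):
    print(f"{r} °C: error {e:+.1f} °C")
print("Most accurate reading:", most_accurate(readings, 20.0))
```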

Precision

Precise measurements have very little spread about the mean value. A precise measurement is not necessarily accurate.
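The difference between accuracy and precision can be shown with two hypothetical sets of temperature readings, assuming a true value of 20.0 °C. In this Python sketch, how far the mean lies from the true value indicates accuracy (on average), and the standard deviation measures the spread about the mean, i.e. precision. The function name and data are illustrative only.

```python
import statistics

def accuracy_and_precision(readings, true_value):
    """Return (distance of the mean from the true value, sample standard deviation).

    A mean close to the true value suggests the readings are accurate on average;
    a small standard deviation suggests the readings are precise.
    """
    return (abs(statistics.mean(readings) - true_value),
            statistics.stdev(readings))

TRUE_VALUE = 20.0  # hypothetical true temperature in °C
precise_not_accurate = [22.1, 22.0, 22.2, 22.1]  # tightly grouped, but about 2 °C too high
accurate_not_precise = [19.0, 21.2, 20.5, 19.3]  # centred on 20 °C, but widely spread

for label, data in [("Set A", precise_not_accurate), ("Set B", accurate_not_precise)]:
    offset, spread = accuracy_and_precision(data, TRUE_VALUE)
    print(f"{label}: mean is {offset:.2f} °C from the true value, "
          f"standard deviation {spread:.2f} °C")
```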

Errors

An error is the difference between the result that you found and the true value.

There are three possible sources of error:

- Measurement error: mistakes made when collecting the data, such as a student mis-reading a thermometer.

- Operator error: differences in the results collected by different people, such as different people giving different scores.

- Sampling error: local differences meaning that one sample gives slightly different results to another.

These can produce two possible types of error:

Random error: an error that causes results to be spread about the true value. For example, imagine a student takes 20 temperature readings and mis-reads the thermometer for 2 of them. The effect of random errors can be reduced by taking more measurements.

Systematic error: an error that causes results to differ from the true value by a consistent amount each time the measurement is made. For example, imagine a student uses weighing scales that have not been zeroed, so every result is 10 g too high. The effect of systematic errors cannot be reduced by taking more measurements.
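A small simulation can show why more measurements help with random error but not with systematic error. This Python sketch assumes a hypothetical true mass of 100 g, random errors drawn from a normal distribution with a standard deviation of 2 g and, optionally, scales that read 10 g too high as in the weighing example. The function name and all values are illustrative only.

```python
import random
import statistics

TRUE_MASS = 100.0  # hypothetical true mass of a sample, in grams

def mean_reading(n, random_sd=2.0, offset=0.0, seed=1):
    """Mean of n simulated readings.

    random_sd: size of the random error (readings scatter about the true value)
    offset:    size of the systematic error (e.g. scales that were not zeroed)
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    readings = [TRUE_MASS + offset + rng.gauss(0, random_sd) for _ in range(n)]
    return statistics.mean(readings)

for n in (5, 50, 5000):
    random_only = mean_reading(n) - TRUE_MASS
    with_offset = mean_reading(n, offset=10.0) - TRUE_MASS
    print(f"n={n:>4}: random error only {random_only:+.2f} g, "
          f"with 10 g systematic error {with_offset:+.2f} g")
```

As n grows, the error of the mean shrinks towards 0 g when only random error is present, but stays close to +10 g when the scales are offset.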

Anomalies

These are values in a set of results which are judged not to be part of the variation caused by random uncertainty.
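Deciding whether a value is anomalous is a judgement, but a rule of thumb can help you spot candidates to investigate. This Python sketch uses one common convention, not taken from this guide: flag any value more than 1.5 × the interquartile range below the lower quartile or above the upper quartile. The function name and the infiltration times are hypothetical, and a flagged value should be checked against your field notes rather than deleted automatically.

```python
import statistics

def flag_anomalies(values, k=1.5):
    """Return values lying more than k * IQR outside the quartiles (a rule of thumb)."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # needs at least two values
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]

# Hypothetical infiltration times (seconds) from repeated tests at one site.
times = [14, 15, 13, 16, 15, 14, 48]
print("Possible anomalies:", flag_anomalies(times))
```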

Validity

The suitability of the method to answer the question that it was intended to answer.

Reliability

This is the extent to which measurements are consistent.
